Facebook has 3.06 billion active users, making it the most used social media platform worldwide. With that many users, an enormous amount of content is generated every second. People share their thoughts, pictures, and updates, and sometimes negative comments and hate speech.

For this reason, Facebook has updated its content policy and Community Standards. Under the 2025 Community Standards and policy, Facebook monitors such content directly. However, this doesn’t mean more bans. The approach combines fewer restrictions with closer monitoring.

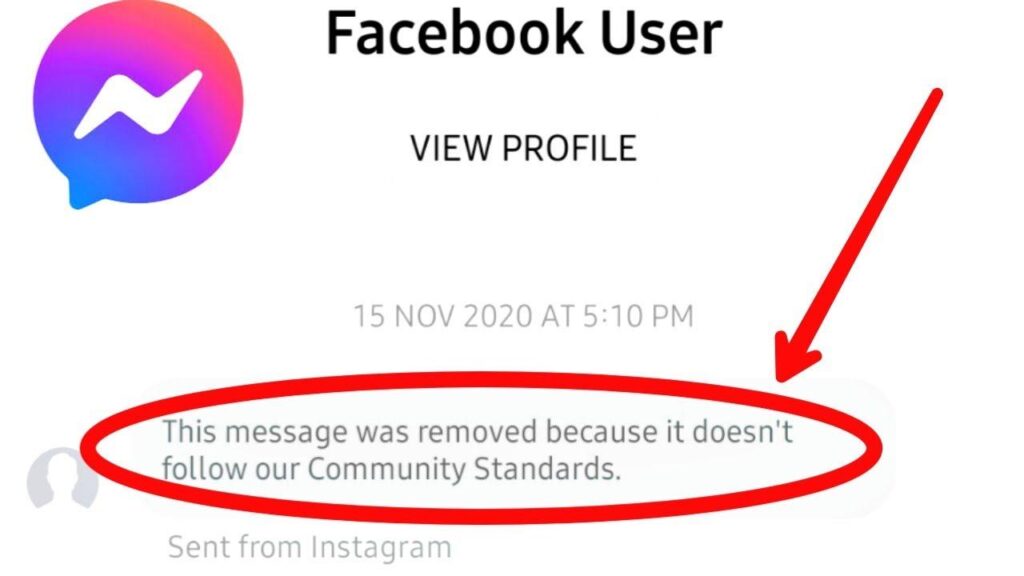

As a result, you might see a message like “This message was removed because it doesn’t follow community standards.” You don’t need to worry if you see it. It usually means Meta flagged certain words or phrases in your message as restricted. Let’s look at what this message means and whether Facebook can actually read your messages.

Can Facebook Scan Our Messages for Identifying Community Standards Violations?

Facebook says it scans messages in its chat app to detect and block content that violates its Community Standards. According to Facebook, this does not compromise user privacy, because automated AI models do the review. These models check messages, images, and links for sensitive or harmful content, such as child sexual abuse material, restricted words, or harassment.

Facebook also gives users several tools to control what reaches them. The three main ones are:

Hidden Words on Meta’s Messaging Platforms:

Users can choose offensive words, phrases, symbols, and images to filter into a hidden folder. Meta then automatically removes messages that contain them from view. Because each user sets their own list, the filter is personalized.

Reporting Messages or Profiles:

Users can report anything that makes them uncomfortable. Facebook offers several reporting options, such as harassment or hate speech. Because children and teens also use Facebook, the platform has introduced new child and teen protection rules.

Message Request Permissions:

Facebook Messenger has a message request option that stops people from landing directly in your inbox. If users don’t want certain people to be able to send them a message request at all, they can change this permission.

What Kind of Content Can Go Against Facebook Community Standards?

Facebook has specific community policies. It will flag your messages if they violate any of these policies or the law. The “This message was removed because it doesn’t follow community standards” notice typically appears in the following cases:

- Hate Speech: Messages that contain hate speech and offensive language can be flagged by Facebook. Facebook removes hate speech that targets an individual or group based on factors such as race, identity, gender, or disability.

- Graphic Violence: Messages that promote gruesome clips or self-harm videos also go against Facebook’s Community Standards. These harmful videos can cause panic and spread harmful ideas among viewers, so Facebook takes them down.

- Harassment Issues: Facebook’s Community Standards cover messages that contain bullying or verbal or sexual harassment. Facebook does not tolerate cyberbullying, because it creates a harmful and unsafe environment.

- Cases of Scam and Fraud: Many people try to scam others online through text messages, advertisements, or links. Facebook removes these fraudulent messages to protect users from financial loss.

- Misleading Information: Users spread large amounts of misleading information and fake news on Facebook. This content can fuel conspiracy theories that cause real-world panic or harm others. Facebook removes these messages to limit the spread of misinformation.

- Threatening Messages: Some users receive harmful or threatening messages, such as messages involving illegal activities, violence, or blackmail. Facebook removes these to maintain a safe environment.

- Inappropriate Content: Some people also receive messages containing inappropriate content, such as nudity and pornography. Facebook strictly prohibits these messages and removes them immediately.

What Happens When Facebook Removes My Content?

When Facebook removes your content, it notifies you of what you posted that went against its Community Standards. This notice normally appears in your feed the next time you log in after the removal, and you can also find it at any time in your “Support Inbox.” Facebook briefly explains why the content was removed and which part of the Community Standards it violated, so you can avoid similar problems in the future.

Your account may be restricted or disabled, depending on the policy you violated, your previous history, and the number of strikes you have received. However, if you believe your content or account was removed without a violation, you can always appeal.

How Can You Complain When Facebook Messenger Removes Your Message?

Facebook’s automated systems hide or remove content when they detect a Community Standards violation in your account. However, this detection system can make mistakes when deciding to take content down.

If you think your content was removed in error, you can request a review. Human moderators will then examine the case and make a decision. If your content or account was removed by mistake, it will be restored.

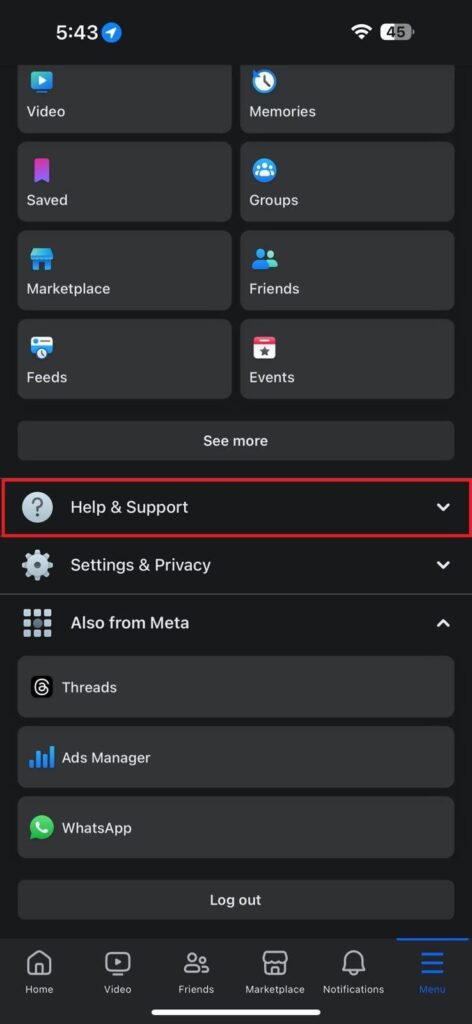

Step 1: To file a complaint, first go to the “Menu” tab in the Facebook app. Then, scroll down and tap “Help and Support” to display a list of options.

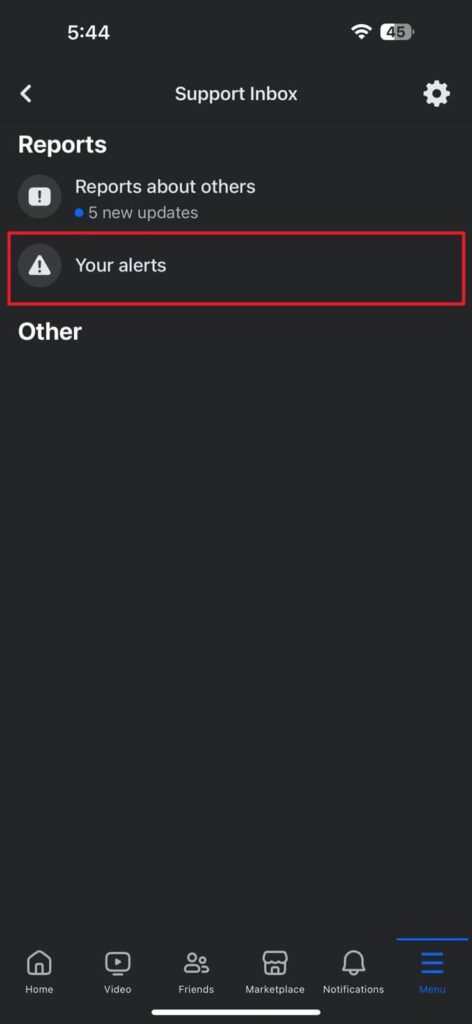

Step 2: Next, tap “Support Inbox” and wait for the “Reports” window to load. Then open “Your Alerts,” select the relevant message, and tap “Disagree with Decision.” After your complaint is reviewed, you will receive a notification telling you whether your content will be restored.

FAQs about Facebook Community Standards

Q1. How does Facebook enforce its Community Standards?

Facebook enforces its Community Standards using automated systems and human content reviewers. The automated systems use machine learning to identify and remove content that violates Facebook’s Community Standards. Human reviewers handle the more complex cases, including reports, warnings, review appeals, and account suspensions.

Q2. Can users appeal decisions related to message content removal?

Yes, users can appeal decisions to remove their messages on Facebook. If they believe a message was wrongly removed for violating the Community Standards, they can file a review request and provide additional reasons or context. Facebook may then reconsider its decision and will notify you of the outcome.

Q3. Does Facebook monitor content automatically or manually?

Facebook uses a combination of automated systems and human review to enforce its Community Standards. The automated systems identify violating content, such as violent material, and remove it, while human reviewers assess more complex cases. These include content that may be threatening, offensive, inappropriate, or otherwise objectionable.

Q4. What should I do after receiving abusive messages on Facebook?

When you receive a harassing message, your first step should be to report it to Facebook. Facebook reviews the report and acts against the message if it breaks the rules. You can also block the sender to prevent further contact. Together, these two measures can help you avoid unwanted and abusive messages.

Conclusion

The “This message was removed because it doesn’t follow community standards” notice is a reminder of Facebook’s privacy policy and Community Standards. Facebook’s close monitoring of content helps maintain a respectful online environment. We recommend reviewing the Community Standards and policy updates regularly so you stay informed about your rights, privacy concerns, and critical alerts. You can always report incorrect removals, as well as any messages or profiles you think should be flagged. Keep an eye on the safety tips that Facebook shares with its users.

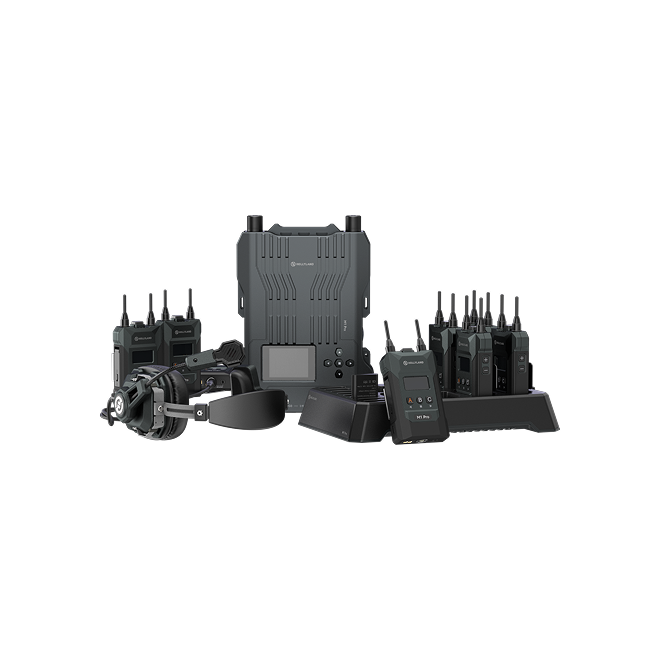

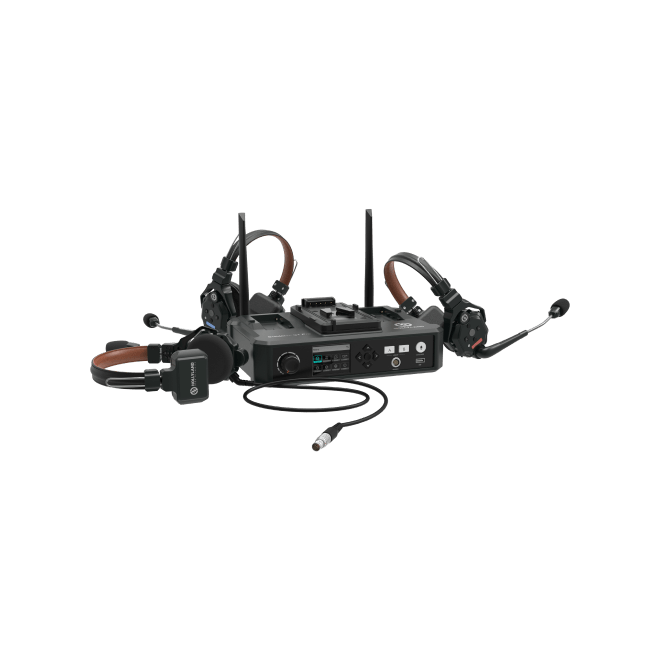

Additionally, if you stream or post video, reliable equipment such as a streaming camera, lighting, and a microphone can help. It delivers high-quality video and sound with a stable connection, which makes it easier to meet the platform’s community and content standards.

