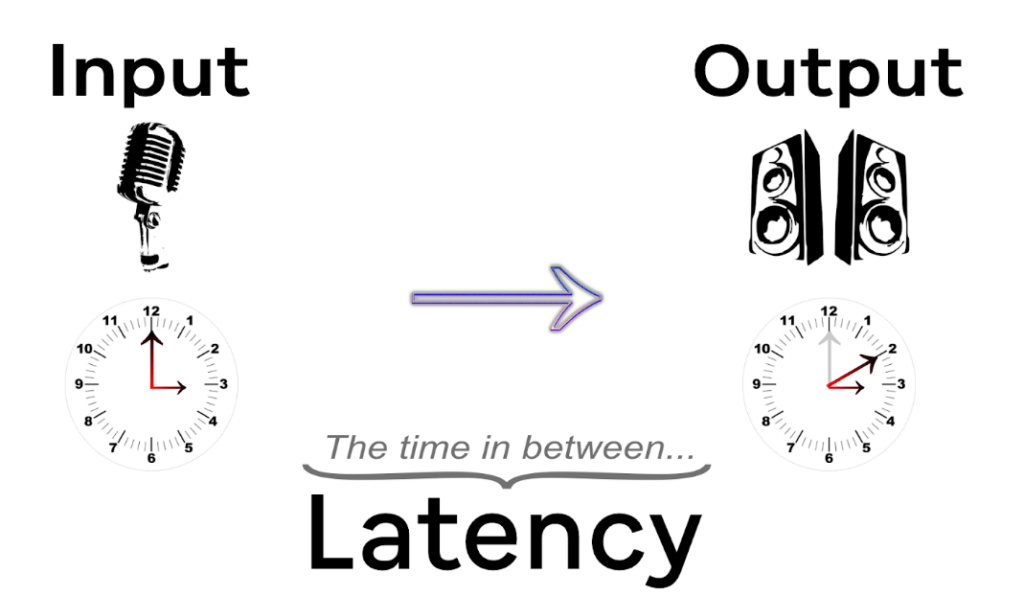

If latency sounds like an unfamiliar word, you probably know its synonym, ‘delay.’ It plays a key role in audio, whether in live sound, studio recording, or listening to music at home through earphones or speakers. Yet many people pay little attention to latency until they notice an excessive gap between when a sound is produced and when they hear it.

In this article, you will learn what latency in audio is, what its types are, and how it shows up in sound. You will also explore the factors that lead to latency, ways of measuring it, and 8 methods to reduce it.

What is Latency in Audio? Explained

In audio work, such as recording instruments and vocals, latency is the time delay between the moment an artist plays or sings and the moment that sound is played back through headphones or studio monitors. The delay occurs because the signal entering the sound card cannot exit immediately, so playback lags slightly behind the performance.

Latency creates difficulties for music producers, musicians, and engineers because it makes it hard to synchronize instruments or vocals with the rest of the track. Several factors contribute to it, and they are discussed later in this article.

The good news is that you can reduce latency using several methods. As a rule of thumb, latency of around 10 milliseconds or less is considered acceptable because it does not disrupt the recording process. Above 10 ms, the delay becomes noticeable and can disturb the overall recording experience.

Types of Latency in Audio

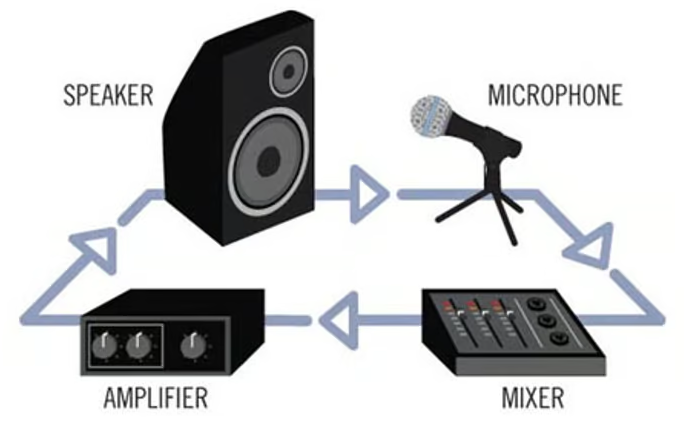

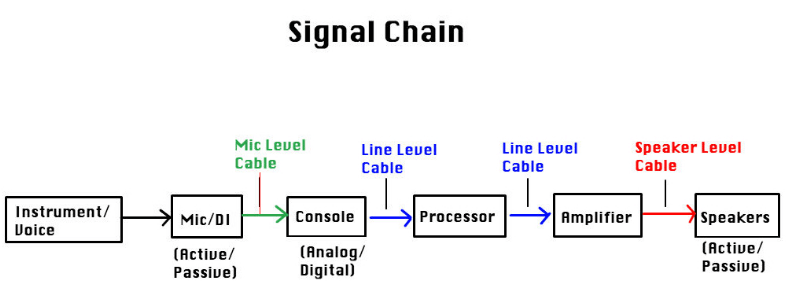

Latency can arise at several stages along the signal path of any audio system. It shows up in different ways at each stage, so understanding its types is important if you work with audio software or devices.

a. Input Latency

Input latency occurs when there is a delay between audio signal generation and recording. For instance, if you are playing an electric guitar and notice a delay before the sound of the chords you play is recorded, that is input latency.

Input latency is a major concern in live performances and studio recordings, where artists need to hear their instruments in real time to maintain perfect timing. Imagine playing a solo that arrives late and throws off the tempo of the entire band. The other musicians will struggle to play in rhythm, all because of input latency.

b. Output Latency

Output latency is the delay between the audio signal being processed and its playback through output equipment like headphones or speakers. It can create a lot of issues in live sound and monitoring.

For instance, a live musician wearing in-ear monitors will experience a delay between what they play and the sound arriving in their in-ear monitors. This makes the performance disorganized, as the musician cannot stay in sync with other artists on stage or even with a backing track.

c. Round-Trip Latency

Round-trip latency is an essential concept in audio recording and processing. It is the total time a sound takes to enter the audio system through an instrument or microphone, undergo recording or processing, and finally exit through output devices, such as speakers. In other words, it is the sum of the input and output latency plus any processing delay in between.

For example, assume a musician is recording a track in a studio. When they speak or sing into the mic, the sound travels through cables or wireless connections to the audio processing system, such as a computer running a DAW. This system adds a certain amount of processing delay. Once the sound is processed, it is sent to headphones or speakers so the artist can monitor their performance.

Round-trip latency therefore includes both input and output latency. What sets it apart is that the processed sound is returned to the artist, and the total time required for this journey is the round-trip latency. It is important to minimize it so that you hear the processed sound with as little delay as possible.
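To make the idea of a sum concrete, here is a minimal Python sketch. The function name and the three stage delays are hypothetical values chosen for illustration, not measurements from any real system.

```python
def round_trip_latency_ms(input_ms: float, processing_ms: float, output_ms: float) -> float:
    """Add up the delays a signal collects on its way back to the performer."""
    return input_ms + processing_ms + output_ms


# Hypothetical stage delays, in milliseconds.
total = round_trip_latency_ms(input_ms=3.0, processing_ms=2.0, output_ms=3.0)
print(f"Round-trip latency: {total:.1f} ms")
print("Within the 10 ms guideline" if total <= 10.0 else "Above the 10 ms guideline")
```

Swapping in the figures reported by your own interface and DAW shows quickly which stage contributes most to the total.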

d. Processing Latency

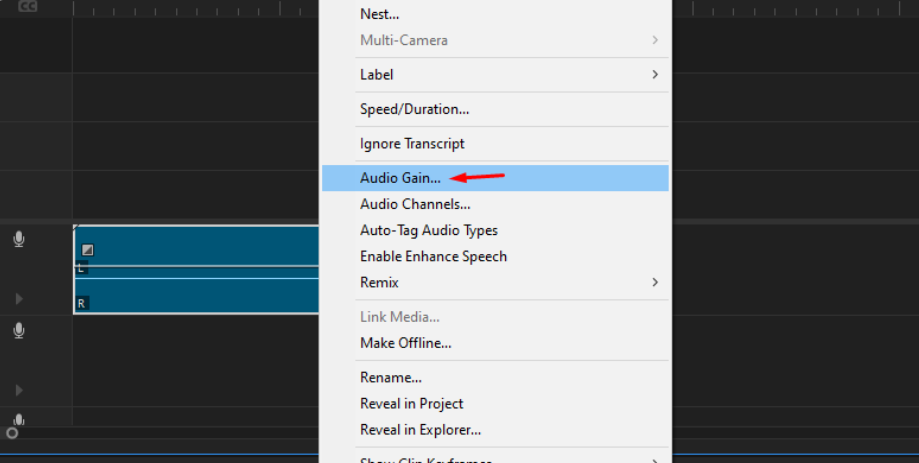

Processing latency is another leading cause of delay. It occurs while the audio signal is processed within software or hardware equipment. This processing can involve mixing, applying sound effects, or any other real-time alteration of the audio.

For example, if you apply echo, EQ, or reverb effects to an audio signal, you may experience a slight delay before you hear the processed audio. This is processing latency.

You must manage processing latency carefully in both live and studio settings so that the processed sound stays aligned with the original sound source and the other components of your audio mix.

Causes of Latency in Audio

Latency in audio is a common problem that can negatively impact the overall quality of audio production and playback. So, let’s dig in to explore the major causes of audio latency.

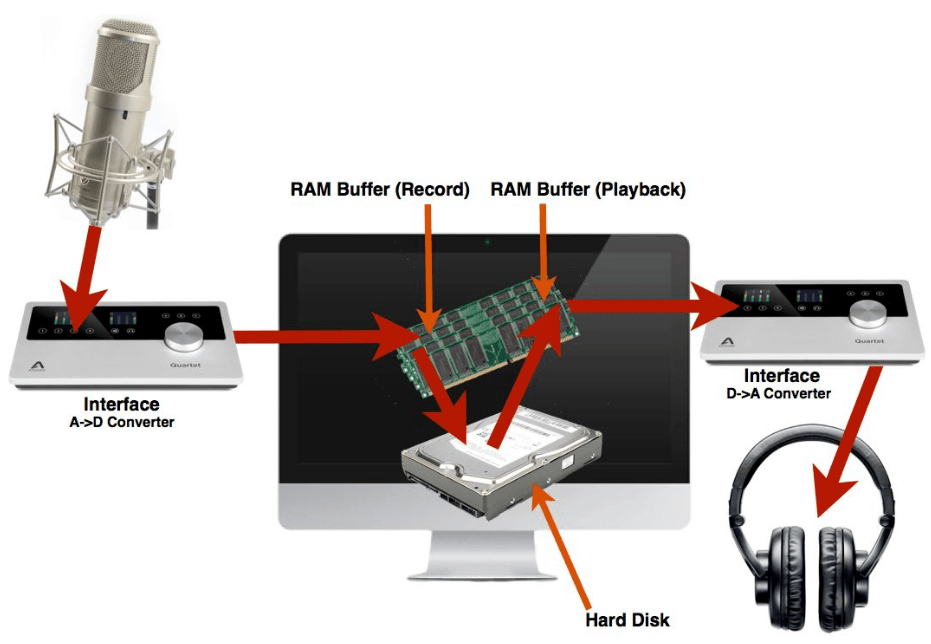

a. Buffering

When you record or process audio, the data is stored and handled in chunks known as buffers. Buffering is one of the basic elements of audio processing, but buffer size also affects latency. Larger buffers reduce strain on the system and allow glitch-free processing, but they also increase latency because each buffer must fill completely before it can be processed.
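The delay added by one buffer follows directly from its size and the sample rate: duration equals buffer size divided by sample rate. The short Python sketch below prints that figure for a few common sizes. The function name is illustrative, and the numbers cover a single buffer only, so a real system with separate input and output buffers plus converter delay will show more.

```python
def buffer_latency_ms(buffer_size_samples: int, sample_rate_hz: int) -> float:
    """Time needed to fill one buffer, in milliseconds."""
    return buffer_size_samples / sample_rate_hz * 1000.0


# Common buffer sizes at a 48 kHz sample rate.
for size in (64, 128, 256, 512, 1024):
    print(f"{size:>5} samples @ 48000 Hz -> {buffer_latency_ms(size, 48000):.2f} ms")
```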

b. Bit Depth and Sample Rate

Bit depth and sample rate largely determine audio quality, but they can also contribute to latency. Higher bit depths and sample rates improve fidelity but produce more data per second, which demands more processing time and power. When the software and hardware cannot keep up with that load, you typically have to raise the buffer size to avoid dropouts, and that adds latency. Note that at a fixed buffer size measured in samples, a higher sample rate actually shortens the duration of each buffer.
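One way to see the extra load is to compute the raw data rate of uncompressed audio, which is sample rate times bytes per sample times channel count. The Python sketch below assumes plain PCM audio and whole-byte bit depths; the function name is illustrative.

```python
def data_rate_bytes_per_second(sample_rate_hz: int, bit_depth: int, channels: int = 2) -> int:
    """Raw PCM data rate; assumes the bit depth is a multiple of 8."""
    return sample_rate_hz * (bit_depth // 8) * channels


cd_quality = data_rate_bytes_per_second(44100, 16)
high_res = data_rate_bytes_per_second(96000, 24)
print(f"44.1 kHz / 16-bit stereo: {cd_quality} bytes per second")
print(f"96 kHz / 24-bit stereo:   {high_res} bytes per second")
print(f"Ratio: {high_res / cd_quality:.2f}x more data to move and process")
```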

c. Software Processing

Digital audio workstations (DAWs) and audio plugins are important production tools, but they can also add latency to signal processing. The more complex the processing chains or effects applied to the audio, the longer the delay. The issue is most common when you work with many effects and tracks within the same project.

d. Hardware Processing

Hardware processing is another major source of latency. It applies to both digital and analog audio hardware, such as mixers, processors, and audio interfaces. When a signal passes through these devices, it goes through several stages, such as conversion and routing. Conversion usually takes the most time, and this is where a noticeable delay can build up.

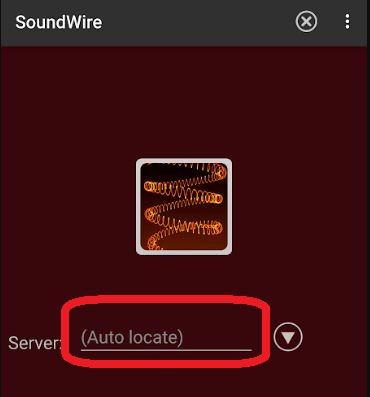

e. Communication Latency

Communication latency is common in live streaming, remote collaboration, and any kind of network-based audio transmission. When audio signals travel between devices or across networks, delays occur because of packet routing, data transfer, and other network factors. That is why it is expected in online music collaborations and remote projects where producers and musicians may be in different cities or countries.

How to Measure Latency in Audio?

If you want to figure out and manage delay in audio, you need to understand ways to measure audio latency. There are three main methods to do it.

a. Clapping Test

One widely used method is the clap test. It involves producing a sharp sound, such as a clap, and recording it through your system while also capturing the original sound as a reference. The time offset between the original and the recorded sound gives you the latency.
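The arithmetic behind a clap test can be sketched in a few lines of Python. This is a simplified illustration that assumes you already have the reference and the recording as lists of samples between -1.0 and 1.0, and that the clap is the first sound loud enough to cross a threshold. The function names, the threshold, and the synthetic data are all hypothetical.

```python
def first_onset(samples, threshold=0.5):
    """Index of the first sample whose magnitude reaches the threshold, or None."""
    for index, value in enumerate(samples):
        if abs(value) >= threshold:
            return index
    return None


def clap_latency_ms(reference, recorded, sample_rate_hz, threshold=0.5):
    """Delay between the clap in the reference and in the recording, in milliseconds."""
    ref_onset = first_onset(reference, threshold)
    rec_onset = first_onset(recorded, threshold)
    if ref_onset is None or rec_onset is None:
        raise ValueError("no clap found above the threshold")
    return (rec_onset - ref_onset) / sample_rate_hz * 1000.0


# Synthetic data: the same clap, arriving 480 samples later in the recording.
sample_rate = 48000
clap = [1.0, -0.8, 0.6, -0.3]
reference = [0.0] * 100 + clap + [0.0] * 900
recorded = [0.0] * 580 + clap + [0.0] * 420
print(f"Measured latency: {clap_latency_ms(reference, recorded, sample_rate):.2f} ms")
```

With real recordings, background noise may cross the threshold before the clap does, so the threshold usually needs adjusting by ear or by looking at the waveform.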

b. Analysis Software Programs

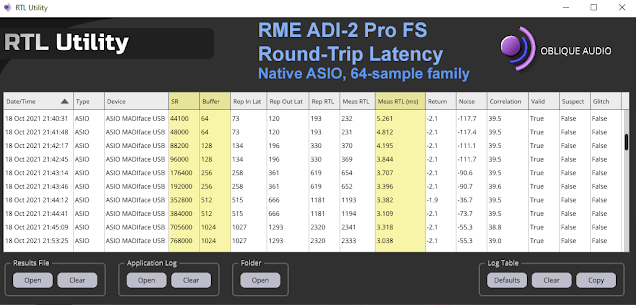

You can use dedicated software, such as RTL Utility, to analyze audio latency. These tools produce test signals and calculate the total time it takes for those signals to pass through the system and be played back.
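A common general technique for this kind of measurement is cross-correlation: slide the sent test signal along the received one and pick the offset where they match best. The Python sketch below illustrates that idea on synthetic data. It is not a description of how RTL Utility or any other specific tool works internally, and the function name, test burst, and delay are hypothetical.

```python
def estimate_lag(sent, received, max_lag):
    """Return the shift (in samples) at which `sent` best lines up with `received`."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Correlation score for this shift: sum of products of overlapping samples.
        score = sum(
            s * received[i + lag]
            for i, s in enumerate(sent)
            if i + lag < len(received)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Synthetic loopback: the test burst comes back 240 samples later at half the level.
sample_rate = 48000
burst = [1.0, -1.0, 1.0, 1.0, -1.0, -1.0, 1.0, -1.0]
received = [0.0] * 240 + [0.5 * s for s in burst] + [0.0] * 50
lag = estimate_lag(burst, received, max_lag=280)
print(f"Estimated delay: {lag} samples = {lag / sample_rate * 1000.0:.2f} ms")
```

Unlike a simple threshold check, this approach still works when the returned signal is quieter than the original, which is why a distinctive test burst is preferred over a plain click.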

c. Built-in Tools in DAWs and Audio Interfaces

Many DAWs and audio interfaces provide built-in tools and functions to measure latency that can accurately determine the input and output system latencies.

8 Ways to Mitigate Latency in Audio

Reducing audio latency is a vital concern in different applications, ranging from real-time communication to professional sound production systems. It is important to remember that audio latency creates poor user experience in various situations. So, here are 8 ways to decrease delay in audio.

1. Invest in High-Speed Cables

Use high-speed connections, such as Thunderbolt or USB 3.0, between the computer and audio devices to ensure smooth, low-latency data transmission.

2. Have Powerful Processors

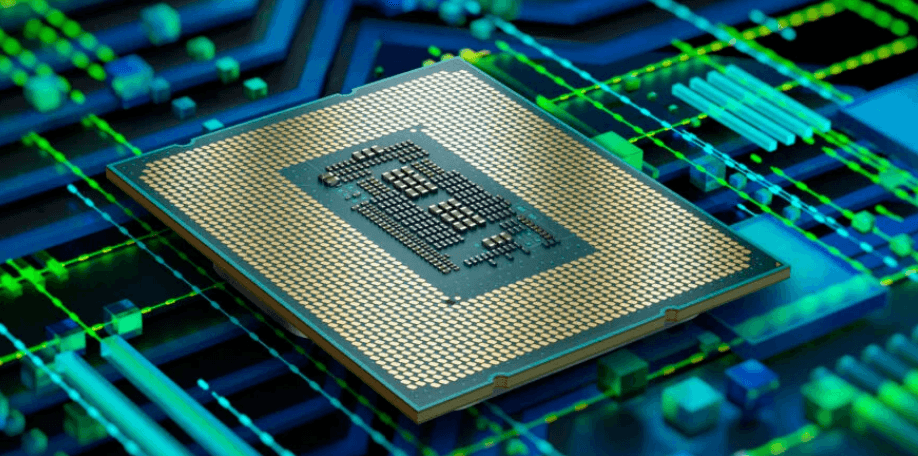

Use high-performance memory and processor units in your computer system to tackle audio-processing activities quickly, mitigating processing latency.

3. Improve Signal Chains

Enhance the signal chain by minimizing the number of connections and devices between the audio source and the output channel. Doing so reduces the overall audio latency.

4. Use ASIO Drivers

One of the best ways to reduce audio latency on Windows is to use Audio Stream Input/Output (ASIO) drivers. These drivers offer low-latency performance by bypassing the operating system’s standard audio processing, allowing direct communication between audio software and hardware.

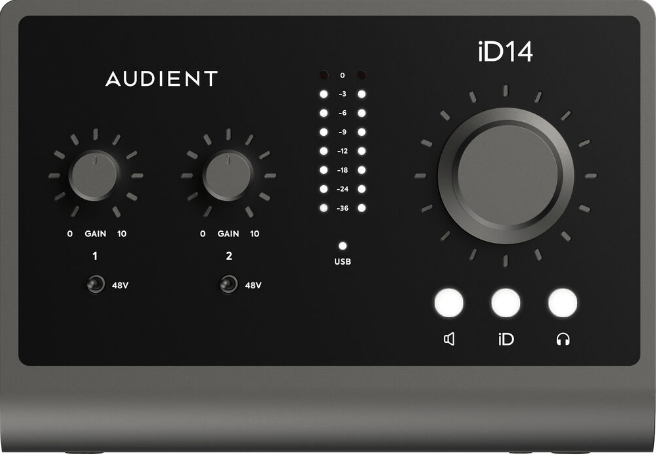

5. Use Top-of-the-Line Audio Interfaces

You can purchase top-notch audio interfaces that come with low-latency functionality. These interfaces are mostly equipped with advanced-level converters and use refined signal processing to reduce latency.

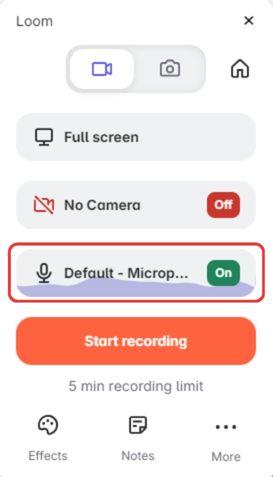

6. Use Low-Latency Monitoring Systems

Look for audio interfaces with direct monitoring. This feature routes the input signal straight to the interface’s outputs, so you hear yourself in real time.

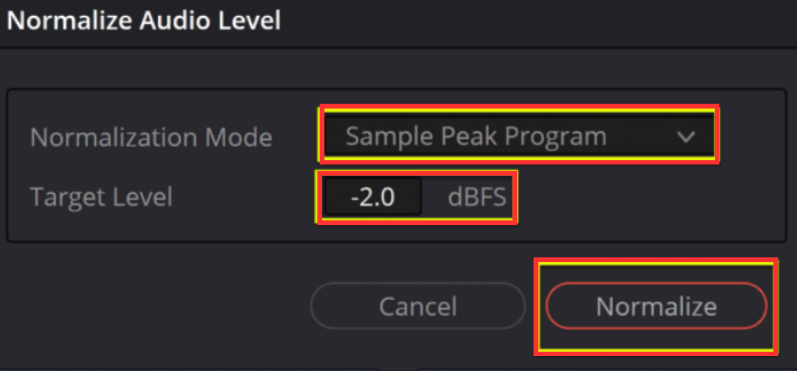

7. Adjust Buffer Size

Adjust the buffer size in your audio software to balance processing load against latency. The smaller the buffer, the lower the latency. However, smaller buffers demand more processing power, so you may need a faster system to use them without glitches.
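One simple way to approach this trade-off is to pick the largest buffer that still fits a latency budget, since larger buffers are easier on the processor. The Python sketch below does that for a list of candidate sizes. The function name, the candidate list, and the 10 ms budget are assumptions for illustration, and the budget here covers a single buffer rather than the full round trip.

```python
def largest_buffer_within_budget(
    sample_rate_hz: int,
    budget_ms: float,
    candidates=(32, 64, 128, 256, 512, 1024, 2048),
):
    """Largest candidate buffer whose duration fits the budget, or None if none fits."""
    fitting = [
        size for size in candidates
        if size / sample_rate_hz * 1000.0 <= budget_ms
    ]
    return max(fitting) if fitting else None


for rate in (44100, 48000, 96000):
    choice = largest_buffer_within_budget(rate, budget_ms=10.0)
    print(f"{rate} Hz -> {choice} samples")
```

If the chosen size still produces clicks or dropouts on your machine, move up one size and accept the extra delay, or lighten the project’s processing load.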

8. Parallel Processing and Multithreading

Use multithreading and parallel processing techniques in sound processing algorithms to divide the workload and decrease processing time, directly lowering the audio latency.
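As a rough picture of dividing the workload, the sketch below processes several tracks concurrently with Python’s standard `ThreadPoolExecutor`. The `apply_gain` effect and the track data are hypothetical. Note also that in CPython, threads do not speed up pure-Python arithmetic, so this shows only the structure; real audio engines typically rely on native threads for the actual gain in speed.

```python
from concurrent.futures import ThreadPoolExecutor


def apply_gain(track, gain=0.5):
    """A stand-in effect: scale every sample in the track."""
    return [sample * gain for sample in track]


def process_tracks_in_parallel(tracks, max_workers=4):
    """Hand each track to a worker thread; results come back in the original order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(apply_gain, tracks))


tracks = [[0.2, 0.4], [1.0, -1.0], [0.0, 0.8]]
print(process_tracks_in_parallel(tracks))
```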

Conclusion

Latency in audio is the time difference between the original sound being produced and that sound being recorded or played back. If an artist records an instrument on a computer and hears the sound late, audio latency is present. Around 10 milliseconds of latency is generally considered acceptable. If the delay exceeds 10 ms, you should find ways to reduce it.

To do that, first identify what type of latency you are dealing with: input, output, processing, or round-trip. Likewise, identify the factors causing the delay, such as hardware or software processing, buffering, or others. To get a clear picture, measure the latency using techniques like the clap test or analysis software. Lastly, apply the methods above, such as using high-quality hardware and software, to mitigate latency in audio.

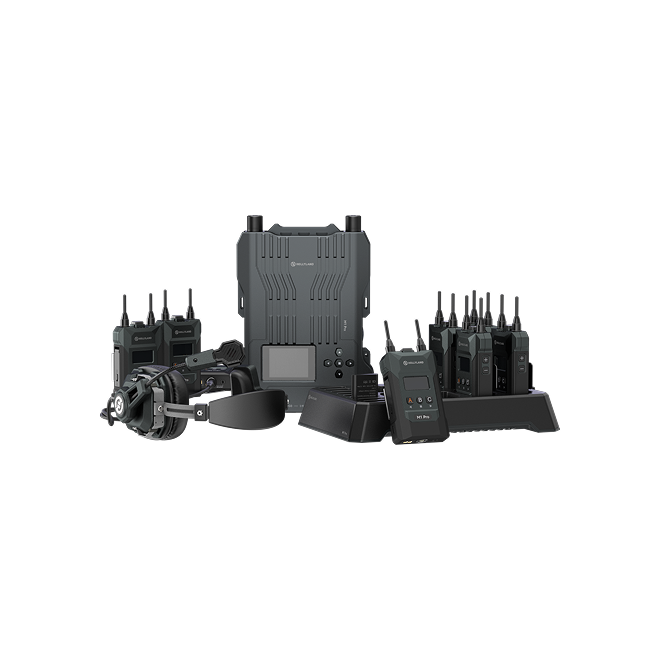

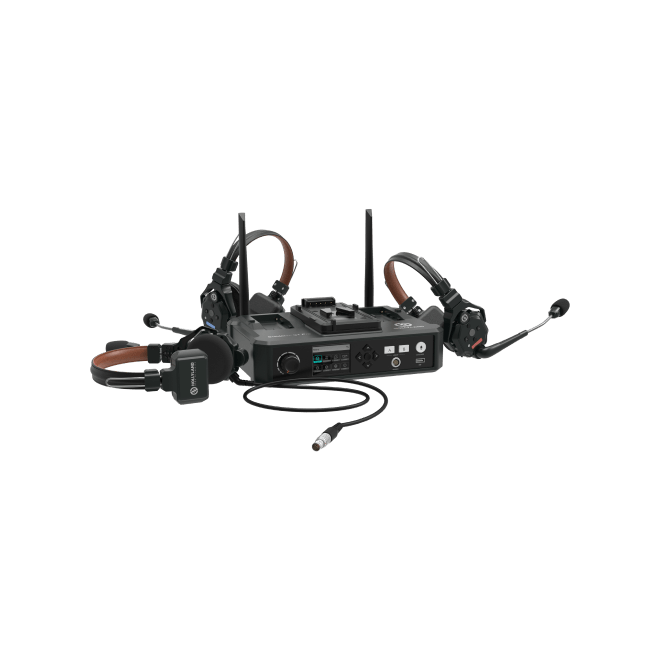

When dealing with audio latency during live events or video productions, having reliable communication equipment is crucial to maintaining timing and clarity. A wireless intercom system designed especially for events and productions can significantly reduce unwanted delays, ensuring your entire team is synchronized seamlessly in real-time.

FAQs

Q1. What is good audio latency?

A good audio latency is often considered to be 10 milliseconds or below.

Q2. How much latency is bad for audio?

If latency exceeds 10 milliseconds, it is said to be bad. It becomes noticeable and negatively impacts audio monitoring and processing in real-time.

Q3. How do we reduce audio interface latency?

You can reduce audio interface latency by using smaller buffer sizes, optimizing your system performance, and choosing interfaces that come with low-latency drivers.

Q4. What is buffer size?

Buffer size means the amount of audio data that is stored temporarily in a buffer for further processing, and it is quantified in milliseconds or samples.

Q5. Does buffer size increase quality?

Buffer size does not change the quality of the audio itself; it trades latency against stability. Smaller buffer sizes reduce latency but raise the risk of glitches in your audio. Larger buffer sizes ease the load on your system but may cause noticeable latency, depending on the audio hardware and software.
